In a ground-breaking development, researchers at the University of California, San Francisco (UCSF) and UC Berkeley have used AI and a brain-computer interface (BCI) to let a woman with severe paralysis caused by a brainstem stroke communicate through a digital avatar. It is the first time that both speech and facial expressions have been synthesized directly from brain signals.

The BCI system, developed by Dr. Edward Chang and his team, translates brain signals into text at nearly 80 words per minute. It can also decode those signals into synthesized speech and the facial movements that accompany it. The work, published in the journal *Nature* on August 23, 2023, points toward a future in which people who have lost the ability to speak could regain it through technology.

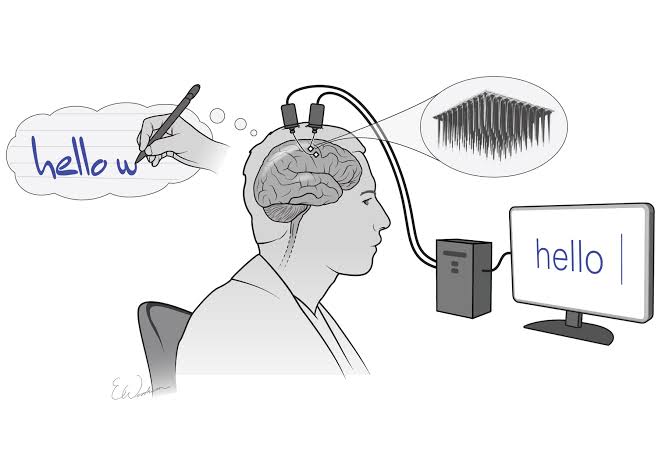

Chang’s team implanted a thin array of electrodes on the surface of the woman’s brain, positioned over areas critical for speech. These electrodes intercepted the brain signals that would otherwise have controlled the muscles responsible for speech and facial expressions. A cable connected to the participant’s head then carried the captured signals to a computer system.

How AI Gave a Paralyzed Woman Her Voice Back

The participant spent weeks training the system’s artificial intelligence to recognize the brain activity patterns associated with her attempts to speak. Rather than recognizing entire words, as conventional approaches do, the researchers’ system decodes words from phonemes, the basic sound units of speech. As a result, the computer needed to learn only 39 phonemes to decode any English word, which improved both accuracy and speed.
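To make the phoneme idea concrete, here is a toy sketch of why a small phoneme inventory is enough: once a decoder outputs a sequence of phonemes, a pronunciation lexicon can turn that sequence back into words, so adding a word means adding a lexicon entry rather than teaching the decoder a new class. This is not the UCSF team's method, which relied on trained neural networks; the six-word `LEXICON` and the `segment_phonemes` helper are hypothetical, and the pronunciations use CMUdict-style ARPAbet symbols with stress markers dropped.

```python
# Toy illustration only: a hypothetical six-word lexicon using
# CMUdict-style ARPAbet pronunciations (stress markers dropped).
# A real system would cover a far larger vocabulary.
LEXICON = {
    "how": ("HH", "AW"),
    "are": ("AA", "R"),
    "you": ("Y", "UW"),
    "i": ("AY",),
    "am": ("AE", "M"),
    "good": ("G", "UH", "D"),
}


def segment_phonemes(phonemes, lexicon=LEXICON):
    """Split a decoded phoneme sequence into lexicon words.

    Dynamic programming: best[i] holds one word sequence covering
    phonemes[:i], or None if no segmentation of that prefix exists.
    Returns None if the whole sequence cannot be segmented.
    """
    # Reverse index: pronunciation tuple -> word (homophones collapse; fine for a toy).
    by_pron = {pron: word for word, pron in lexicon.items()}
    max_len = max(len(pron) for pron in by_pron)

    n = len(phonemes)
    best = [None] * (n + 1)
    best[0] = []  # the empty prefix is trivially segmented
    for end in range(1, n + 1):
        # Only spans up to the longest pronunciation can be a word.
        for start in range(max(0, end - max_len), end):
            if best[start] is None:
                continue
            word = by_pron.get(tuple(phonemes[start:end]))
            if word is not None:
                best[end] = best[start] + [word]
                break
    return best[n]


if __name__ == "__main__":
    decoded = ["HH", "AW", "AA", "R", "Y", "UW"]
    print(segment_phonemes(decoded))  # ['how', 'are', 'you']
```

A real decoder's phoneme output is noisy and ambiguous, so practical systems score many candidate sequences and typically weigh them with a language model instead of requiring an exact lexicon match as this sketch does.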

To recreate her voice, the team developed an AI-powered speech-synthesis algorithm and personalized it to sound like her voice before the injury, using a recording of her speaking. They also used software to animate the avatar’s face, translating brain signals into jaw movements, lip motions, and facial expressions.

Beyond restoring the ability to communicate, the technology could help people with paralysis live more independently and take a fuller part in social interactions. The research team’s next goal is a wireless version of the BCI system, which would eliminate the physical cable connection and give users more freedom of movement.

This achievement marks a pivotal moment in medical technology and offers renewed hope to people who face severe communication challenges because of paralysis or similar conditions. The convergence of artificial intelligence and neuroscience could allow people to speak and express themselves despite severe physical limitations.